Lewa

Member

Recently I upgraded to a new PC, as my (now former) build was getting pretty slow to work with due to its (by today's standards) ancient hardware.

My old PC build used an Nvidia GPU (GeForce 9600 GT with 512 MB of GDDR3 VRAM).

So for the last 3 years I was working on my project and (pretty much) exclusively testing it on my Nvidia GPU.

I was also able to test the game on my laptop, which has an Intel HD GPU.

None of my rigs had an AMD GPU. Until now.

My current PC build has a Radeon RX 480, and I noticed a very big issue with it.

I'm not sure why this is the case, but shaders seem to calculate values at a lower precision (in particular in the fragment shader) on AMD hardware.

I made a video showcasing this issue:

Now, my suspicion is that the variables which are passed from the vertex to the fragment shader are being interpolated at a lower precision (or are simply stored at a lower precision) on AMD hardware.
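To illustrate what that suspicion would mean in practice, here is a rough Python sketch (not the actual shader). It interpolates a UV coordinate across one cube face and rounds the result to a given number of significand bits. Everything in it is an assumption made for illustration: the helper names `quantize` and `worst_texel_error`, the 512 repeats, the 64-texel texture, and especially the 16-bit case, which is not a claim about what AMD hardware actually does.

```python
import math

def quantize(x, mantissa_bits):
    """Round x to a float that keeps only `mantissa_bits` significand bits."""
    if x == 0.0:
        return 0.0
    m, e = math.frexp(x)            # x = m * 2**e with 0.5 <= |m| < 1
    scale = 2.0 ** mantissa_bits
    return math.ldexp(round(m * scale) / scale, e)

def worst_texel_error(repeats, texture_size, mantissa_bits, samples=1000):
    """Largest rounding error, in texels, of a UV interpolated from 0 to `repeats`."""
    worst = 0.0
    for i in range(samples + 1):
        exact = repeats * (i / samples)          # interpolated UV at this fragment
        stored = quantize(exact, mantissa_bits)  # what survives at reduced precision
        worst = max(worst, abs(stored - exact) * texture_size)
    return worst

# Hypothetical comparison: a full float32 significand (24 bits) vs. a reduced one (16 bits).
full = worst_texel_error(repeats=512, texture_size=64, mantissa_bits=24)
reduced = worst_texel_error(repeats=512, texture_size=64, mantissa_bits=16)
print(f"24 bits: {full:.6f} texels, 16 bits: {reduced:.6f} texels")
```

If the interpolated value really lost bits like this, the error at the far end of the face would grow to a noticeable fraction of a texel, which would match edges that are no longer pixel perfect.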

On my old Nvidia GeForce (and even on the Intel HD 4000) I've never experienced those issues.

The blocks are simple cubes (only 8 vertices) with a lot of UV repeats along all axes.

I could subdivide those blocks in order to increase the precision of the UV coordinates. The problem is that:

1) I would need to subdivide the geometry A LOT, which can cripple performance.

2) It doesn't really solve the problem, as even with a lot of subdivisions I wasn't able to get rid of it completely...

3) It also seems to depend on the distance of the texture from the camera. Even with a lot of subdivisions, if you look at the texture from far away, it can still lead to this judder.

Of course, large UV values will lead to precision errors once you go above a certain threshold. The problem is that on AMD hardware, those issues crop up way sooner than they should.

Neither my old GeForce nor my Intel HD has the same issues.
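To put rough numbers on that threshold, here is a small Python sketch (again, not the shader) that measures the spacing between neighbouring 32-bit floats at a given UV value and how many distinct values fit inside one texel. The helper names `ulp32` and `distinct_values_per_texel` and the 64-texel texture size are assumptions made for this example only.

```python
import struct

def ulp32(x):
    """Gap between x (as a positive float32) and the next larger float32."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    current = struct.unpack("<f", struct.pack("<I", bits))[0]
    following = struct.unpack("<f", struct.pack("<I", bits + 1))[0]
    return following - current

def distinct_values_per_texel(uv, texture_size):
    """How many representable float32 UV values fall inside one texel at `uv`."""
    texel_width = 1.0 / texture_size
    return texel_width / ulp32(uv)

# Assumed 64-texel texture, sampled at increasingly large UV repeat counts.
for uv in (1.0, 16.0, 1024.0, 65536.0):
    print(uv, ulp32(uv), distinct_values_per_texel(uv, 64))
```

With full 32-bit floats there are still 128 distinct UV values per texel after 1024 repeats, so an edge should stay sharp for a long time. If anything in the pipeline carried fewer bits than that, the same breakdown would simply show up at a much smaller repeat count.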

I made a little example project with a simple shader which should showcase this issue.

Compiled testproject

Source of testproject (.gmz)

If you have the time, download the compiled testproject and run it. As far as I was able to test, Nvidia GPUs have no issues whatsoever, while AMD GPUs do experience precision issues. (Tested this on two RX 480s and one Radeon 7xxxx model.)

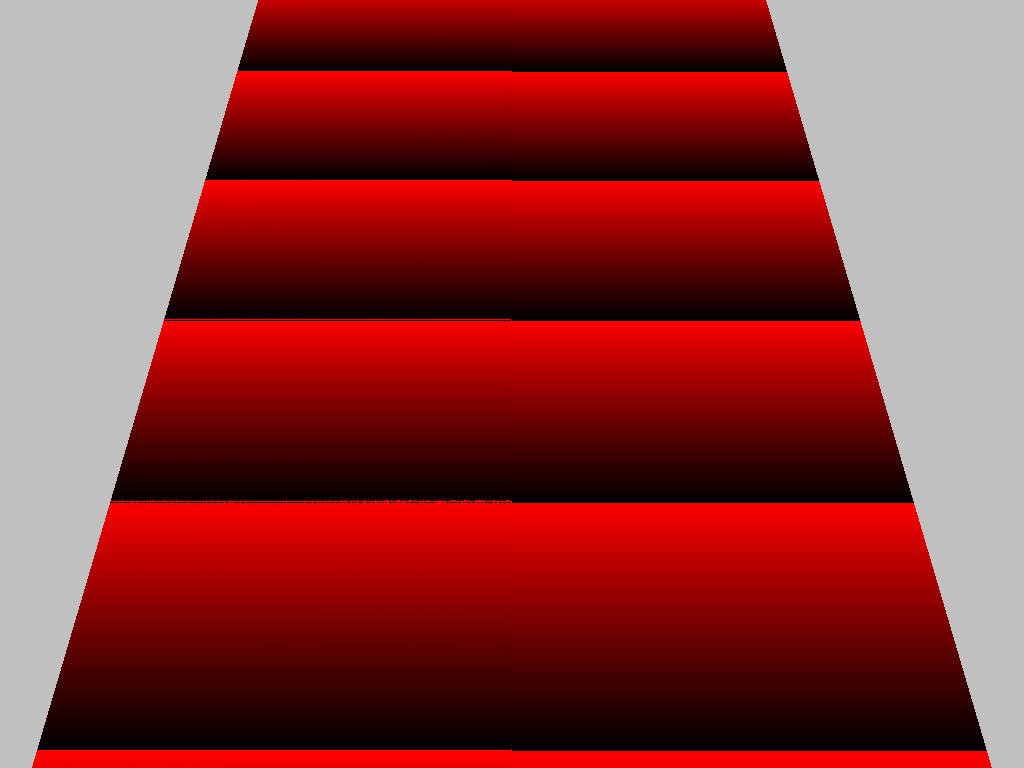

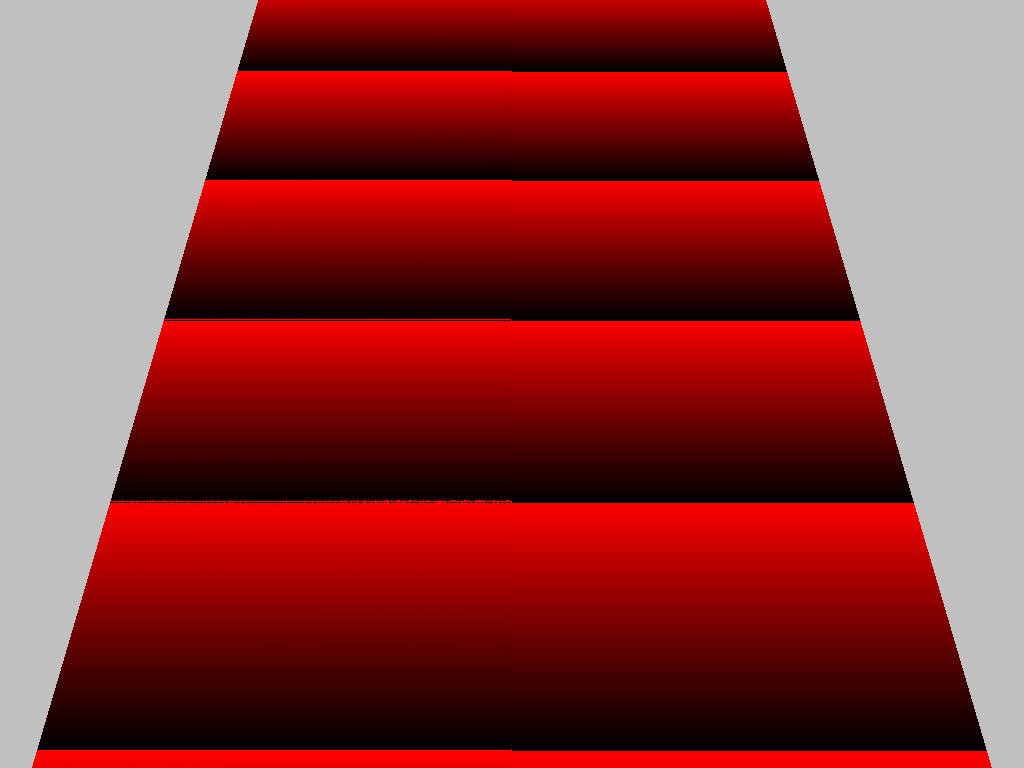

Here is how it looks on my AMD GPU.

Notice how the jump from red to black isn't really pixel perfect. Instead you get this "judder", which occurs if you move the camera (due to precision issues).

You can see it way better if you download the example and run it (given that you have an AMD GPU).

Again, I suspect that something with the shader execution on AMD GPUs isn't quite right (are they using fewer bits to store floating point variables compared to Nvidia and Intel?). I spent the last 4 days debugging and searching for a solution online without success.

So maybe some of you have an idea as to what could be causing this problem (and how to fix it).
